Automation Code Creation with ollama

My review of using ollama to help create code for automation

“By harnessing the power of AI, I simplify my coding workflow, freeing up time to focus on creating blog posts that resonate with the vCommunity. With the help of AI, my writing becomes more precise, more creative, and more impactful.” - Dale Hassinger

ollama

ollama Web Site Link:

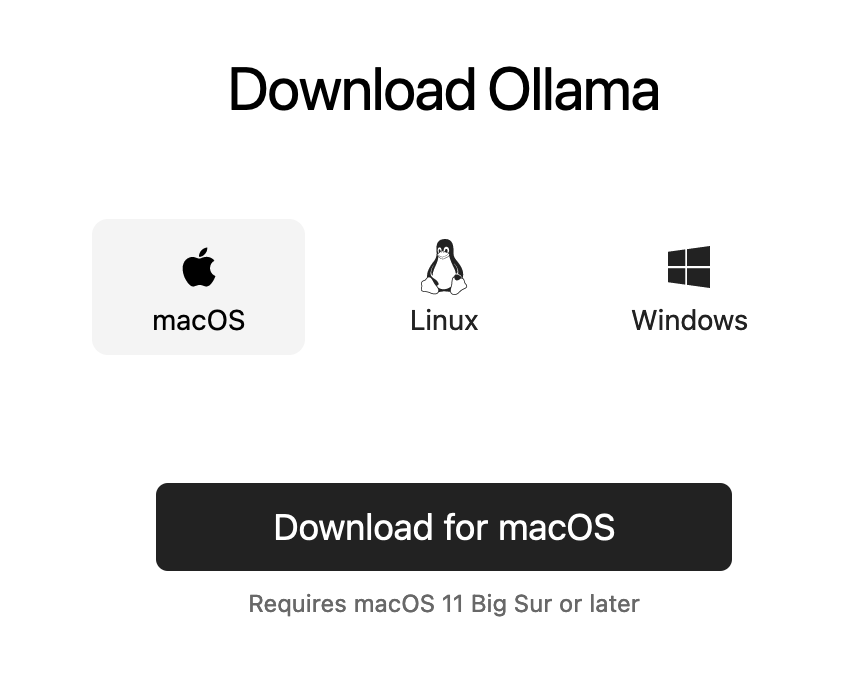

Building on my experience with ChatGPT, I decided to explore Ollama Local on my trusty Apple MacBook Pro M1. As an AI enthusiast who likes user-friendly tools, I was eager to see how seamless the installation process would be. To my delight, setting up Ollama Local on a Mac is incredibly straightforward.

To get started, simply head to the Ollama website and download the application as a zip file. Expand the contents of the archive and copy the Ollama Application file into your Applications folder. Double-clicking the icon will launch the app in no time – it’s that easy!

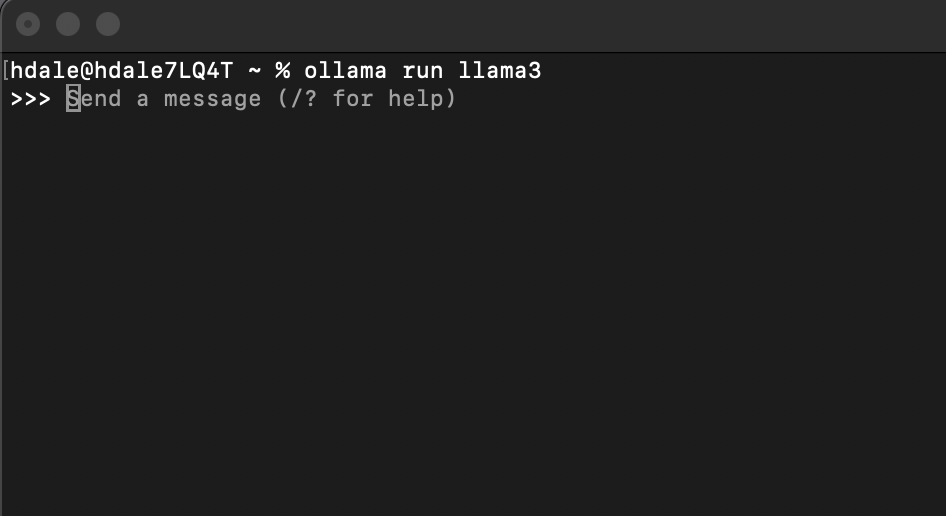

For this review, I’ll be using the Llama3 Large Language Model (LLM) to demonstrate Ollama Local’s capabilities. The first time you run a model, ollama automatically downloads the necessary LLM files for you. Like I said, very easy to use.

With Ollama Local up and running, let’s dive into its features and see what kind of AI-powered magic it can create.

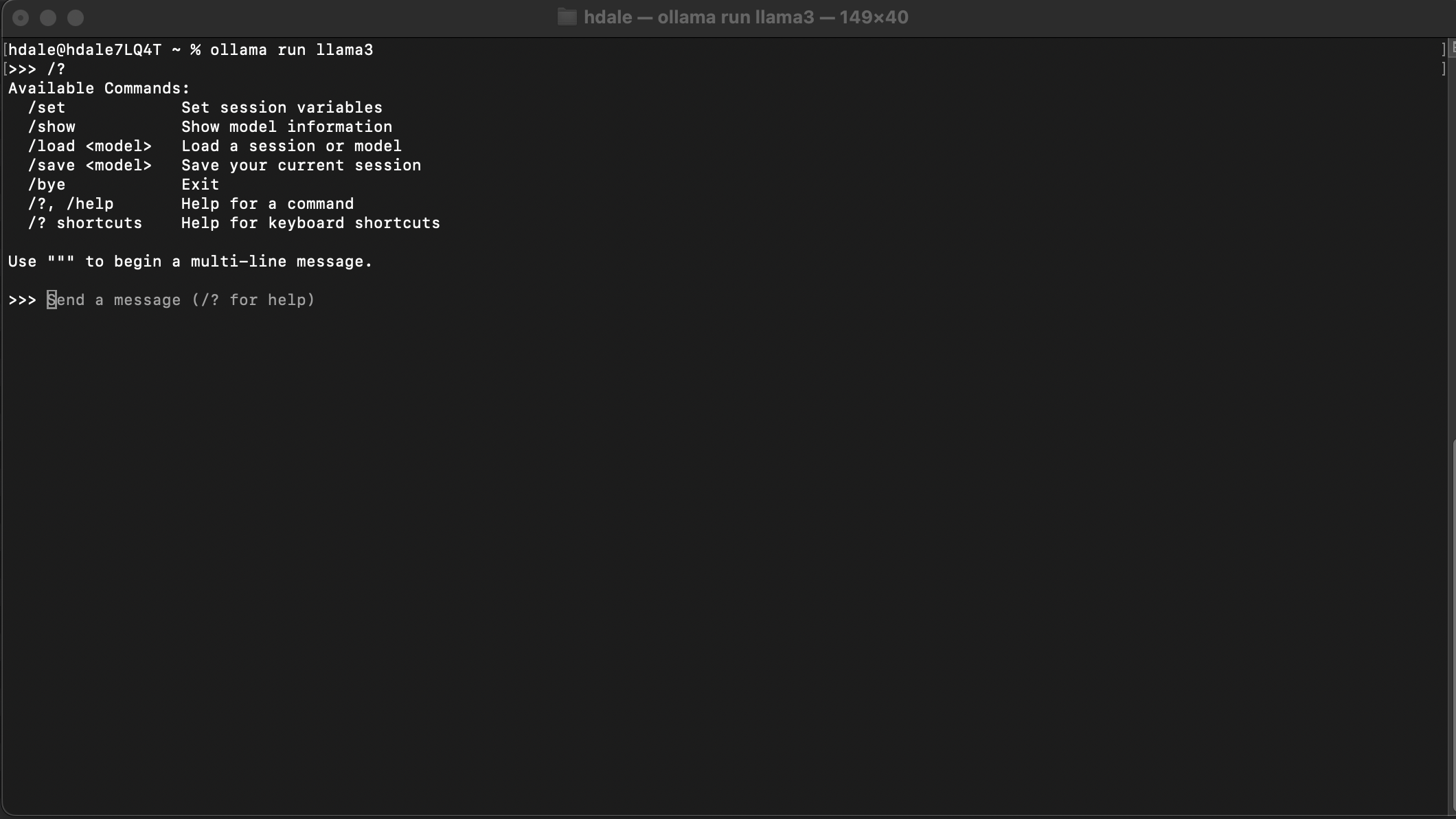

Go to a terminal and type “ollama run llama3” to get started.
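
The same command can also be run non-interactively from a script by passing the question as an extra argument. Here is a minimal Python sketch, assuming the ollama CLI is on your PATH and the llama3 model has already been downloaded. The function names `build_ollama_command` and `ask_once` are made up for illustration.

```python
import shutil
import subprocess


def build_ollama_command(prompt: str, model: str = "llama3") -> list[str]:
    """Return the argument list for a one-shot `ollama run MODEL PROMPT` call."""
    if not prompt.strip():
        raise ValueError("prompt must not be empty")
    return ["ollama", "run", model, prompt]


def ask_once(prompt: str, model: str = "llama3") -> str:
    """Run the ollama CLI once and return whatever it printed to stdout."""
    # Assumption: the ollama binary is installed and reachable on PATH.
    if shutil.which("ollama") is None:
        raise RuntimeError("the ollama CLI was not found on PATH")
    result = subprocess.run(
        build_ollama_command(prompt, model),
        capture_output=True,
        text=True,
        check=True,  # raise CalledProcessError if ollama exits with an error
    )
    return result.stdout.strip()
```

Calling `ask_once("Explain what PowerCLI is in one sentence.")` should return the model's answer as a string. The wording will differ from run to run.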

ollama Models:

There are many models to pick from to use with ollama…

Once you’ve chosen the desired model, the web site will provide you with the commands you need to get started. No need to dig through complex documentation or struggle with unfamiliar code - just follow the straightforward instructions and you’re good to go!

How to run ollama from the CLI:

Here is a quick video to show how to get started from the CLI

- Run command to start ollama

- Ask a question and see the results

- Type /bye to exit ollama

Hands-on experience with this tool? A breeze! Not only is it incredibly user-friendly, but the performance on my laptop has been impressive too. And what really stands out is the speed at which responses are delivered - no waiting around here! The unedited video itself is a testament to its fast processing capabilities.

ollama “Real World” Examples:

Now I will show some examples of how I use ollama every day for coding and writing. These are my main use cases for AI.

ollama PowerShell Function

In my previous blogs, I’ve highlighted the versatility of PowerShell in automating various tasks. In this blog, I’ll show how to harness the power of PowerShell to interact with AI assistants like Ollama. To start, I created a custom function that enables you to ask Ollama questions directly from the PowerShell command line. Here’s the sample code to get you started:

If you are a PowerShell enthusiast, you’re probably used to the flexibility of functions in your scripts. Feel free to use this example as is or adapt it to your own workflow and coding style.
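
The same idea carries over to other languages. As a rough sketch in Python rather than PowerShell (this is not the function from this post), the example below sends a question to Ollama's local REST API. It assumes Ollama is running on its default address, http://localhost:11434. The names `build_generate_request` and `ask_ollama` are hypothetical.

```python
import json
import urllib.request

# Assumption: Ollama is listening on its default local address and port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(prompt: str, model: str = "llama3") -> urllib.request.Request:
    """Build a non-streaming POST request for the /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_ollama(prompt: str, model: str = "llama3", timeout: float = 120.0) -> str:
    """Send the prompt to a locally running Ollama and return the answer text."""
    request = build_generate_request(prompt, model)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # With "stream": False the whole answer arrives in the "response" field.
    return body["response"]
```

With Ollama running, `print(ask_ollama("Convert this curl command to PowerShell: curl -I https://example.com"))` prints the reply. Setting `"stream": False` keeps the sketch simple, at the cost of waiting for the full answer instead of seeing it appear word by word.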

ollama Prompt Engineering Examples:

Set the ‘Role’ to start:

One of my habits when working with ollama, or ChatGPT, is setting a specific ‘role’ for our conversations. By defining a clear context or persona, I’ve found that I’m able to receive more effective and insightful responses from the AI. This approach has been refined over time through experimentation and exploration of the product’s capabilities, yielding better results and a more productive dialogue.

- Act as a PowerShell SME

- Act as a PowerCLI SME

- Act as a VMware SME

- Act as a vRealize SME

- Act as a JavaScript SME to work with VMware Orchestrator

- Act as a Technology Blogger
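
In the CLI you can simply type the role as your first message. When calling Ollama from a script, one option is to send the role as a system message to the /api/chat endpoint. The Python sketch below shows that variant. Treat it as an assumption about how you might wire it up: the default local address and the helper names `build_chat_payload` and `ask_with_role` are illustrative only.

```python
import json
import urllib.request

# Assumption: Ollama is listening on its default local address and port.
CHAT_URL = "http://localhost:11434/api/chat"


def build_chat_payload(role: str, question: str, model: str = "llama3") -> dict:
    """Build an /api/chat request body with the role sent as a system message."""
    return {
        "model": model,
        "stream": False,
        "messages": [
            {"role": "system", "content": f"Act as a {role}"},
            {"role": "user", "content": question},
        ],
    }


def ask_with_role(role: str, question: str, model: str = "llama3",
                  timeout: float = 120.0) -> str:
    """Post the payload to a local Ollama and return the assistant's reply."""
    data = json.dumps(build_chat_payload(role, question, model)).encode("utf-8")
    request = urllib.request.Request(
        CHAT_URL,
        data=data,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # Non-streaming chat replies carry the text under message.content.
    return body["message"]["content"]
```

For example, `ask_with_role("PowerCLI SME", "List all VMs that have a snapshot")` asks the question with the PowerCLI role already in place.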

Questions to generate code:

- PowerCLI:

- Act as a PowerCLI SME

- Create a script to connect to a VMware vCenter. username is administrator@vsphere.local. password is Password123. vCenter name is vcenter8x.corp.local. List all VMs that have a snap. Export the list of VMs with a snap to c:\reports\vms-with-snaps.csv.

Unedited Code Returned:

Code questions that I have used:

- Convert this curl command to PowerShell

- Create an HTML file that does… Include a table with sortable columns, a selectable number of rows, and a search box. It is amazing how advanced the code for a complete web page can be. Awesome!

- Convert this Python to PowerShell or convert this PowerShell to Python

- JavaScript that can be used with VMware Orchestrator Actions and Workflows

- SQL commands

- Linux commands

- vi and nano help

- salt state files

Questions to help with writing:

- My writing process in commands:

- Act as a Technology Blogger

- Reword “Paste what I wrote within double quotes”

- See what the results look like. If I want a second example I will type:

- “again” or

- “another example”

- For my writing style I will use this command a lot:

- use less adjectives

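Llama3’s training data has a cutoff date (Meta’s model card lists March 2023 for the 8B model), so it knows little about recent changes. When asking VMware Aria questions I still often use the vRealize name, because the model knows the products better under their older name.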

- Other writing example questions:

- I want to create a Technology presentation description “Topic VMware vRealize Operations with a focus on Dashboards”
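
Short follow-ups such as “again” or “use less adjectives” only work because the earlier messages are still part of the conversation. The interactive CLI session keeps that history for you. In a script you would have to keep it yourself, roughly as in this Python sketch. The `Conversation` class and `reword` helper are hypothetical, and `send` stands for any function that posts the message list to a chat endpoint and returns the reply text.

```python
from typing import Callable

Message = dict[str, str]


class Conversation:
    """Keep the running message history so short follow-ups have context."""

    def __init__(self, send: Callable[[list[Message]], str]) -> None:
        # `send` is a placeholder for whatever actually talks to the model.
        self._send = send
        self.messages: list[Message] = []

    def say(self, text: str) -> str:
        """Add a user message, send the full history, and record the reply."""
        self.messages.append({"role": "user", "content": text})
        reply = self._send(list(self.messages))
        self.messages.append({"role": "assistant", "content": reply})
        return reply


def reword(chat: Conversation, draft: str) -> str:
    """Ask for a rewording of the draft, quoted as in the steps above."""
    return chat.say(f'Reword "{draft}"')
```

A session following the steps above would call `chat.say("Act as a Technology Blogger")`, then `reword(chat, draft)`, then `chat.say("again")` or `chat.say("use less adjectives")` until the result reads well.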

ollama commands:

Two commands I use the most:

- “ollama run llama3” to start ollama

- “/bye” to exit ollama

Commonsense Rules:

Rules I use when working with AI/LLMs:

- Never use generated code in a production environment without testing it in a lab first

- Always understand the generated code before running it, even in a lab

- Read the generated text before using it

- Understand that AI/LLMs are there to help you, not to do the work for you

- You are responsible for anything generated that you use

Lessons Learned:

- ollama and llama3 are both available at no cost today (04/2024).

- I pay $20 per month for ChatGPT. You get more with ChatGPT, but ollama does a good job as a local LLM option.

Use cases for running ollama locally:

- Traveling and no internet access for AI like ChatGPT.

- Use ollama while flying. Nice way to get some help at 35,000 ft.

- Go to your family cabin off the grid and still use ollama on the MacBook.

- Off the grid camping.

- Basically, if the laptop has power, you have access to ollama AI, which is very cool in my mind. It is awesome to have the power of AI at your fingertips and not require the power of the internet to use it.

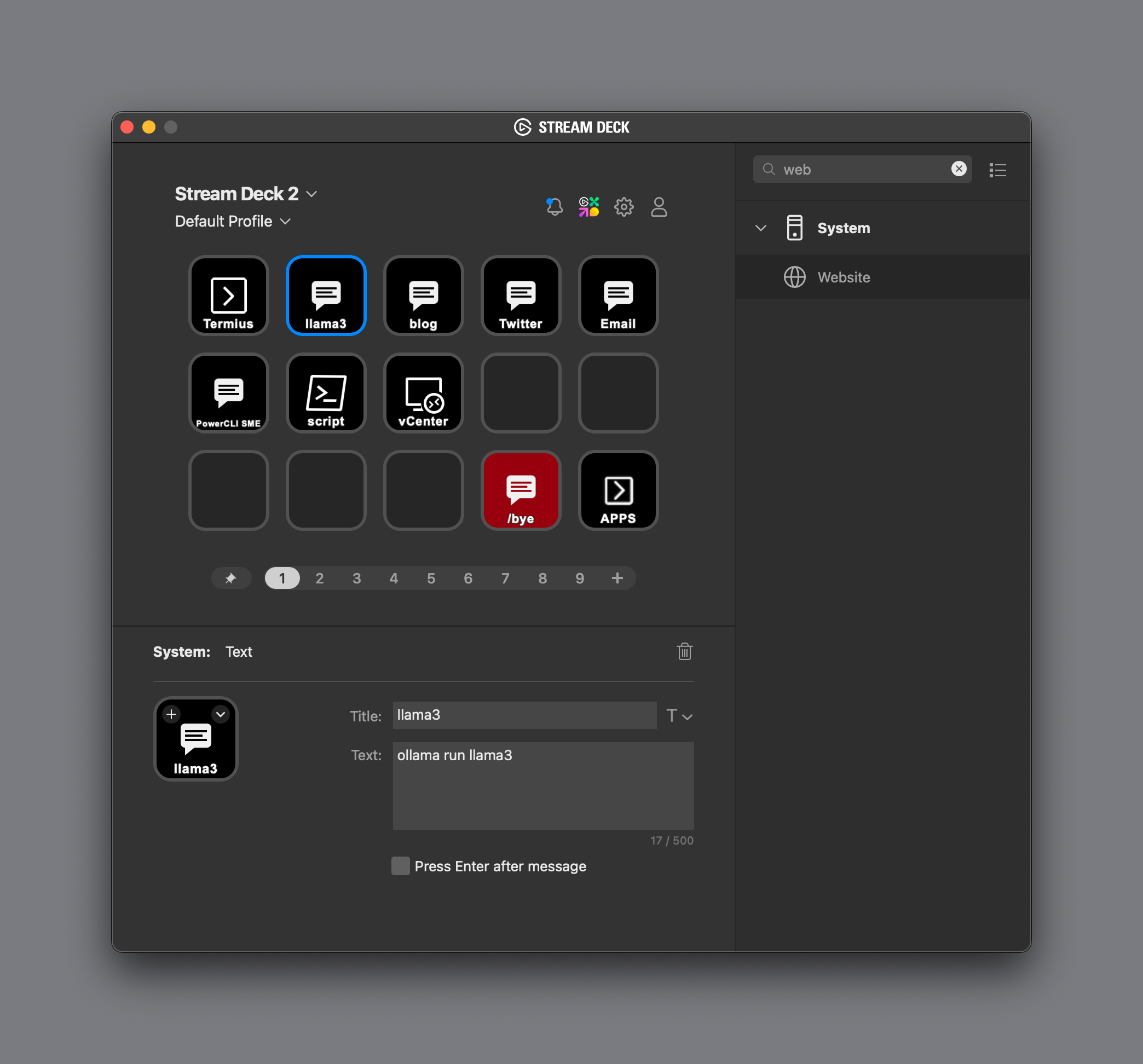

Stream Deck and ollama commands together are a great time saver.

- I assign commands I use the most with ollama to buttons.

- When I have a prompt that works well, assigning the prompt to a Stream Deck button makes it easy to always run the command the same way. I should call them Prompt Engineering Buttons.
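
One way to picture “Prompt Engineering Buttons” is a small table of saved prompts that a button can trigger by name. The Python sketch below is only an illustration: the button names are invented, and it assumes each button is set up to run a script that looks up its prompt. It says nothing about how the buttons in this post are actually configured.

```python
# Hypothetical button names mapped to prompts taken from this post.
PROMPT_BUTTONS = {
    "powercli": "Act as a PowerCLI SME",
    "blogger": "Act as a Technology Blogger",
    "reword": 'Reword "{text}"',
    "less-adjectives": "use less adjectives",
}


def render_prompt(button: str, text: str = "") -> str:
    """Return the saved prompt for a button, filling in {text} if present."""
    try:
        template = PROMPT_BUTTONS[button]
    except KeyError:
        raise ValueError(f"unknown button: {button!r}") from None
    # str.replace is used instead of str.format so that braces inside the
    # pasted text are passed through untouched.
    return template.replace("{text}", text)
```

Because every button sends exactly the same saved text, a prompt that worked well once is repeated the same way every time.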

In this video, the ollama commands were run using a Stream Deck Button. Realtime speed, no video edits:

Links to resources discussed in this Blog Post:

Product Versions used for Blog Post:

- ollama: 0.1.32 | Run “ollama -v” at the CLI to show the ollama version

- LLM: llama3

DO NOT EVER USE ANY CODE FROM A BLOG IN A PRODUCTION ENVIRONMENT! PLEASE TEST ANY CODE IN THIS BLOG IN A LAB!
